The Classifier

This is a convolutional neural network trained to classify images of Rock Paper Scissors hand gestures. It was trained on a fairly small data set of 2188 RGB images. Below you can find information about how it was built and evaluated.

Image preparation

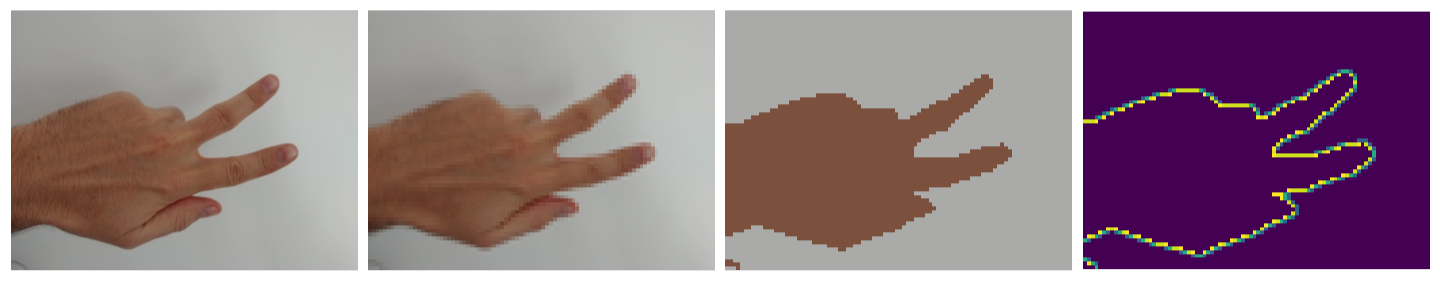

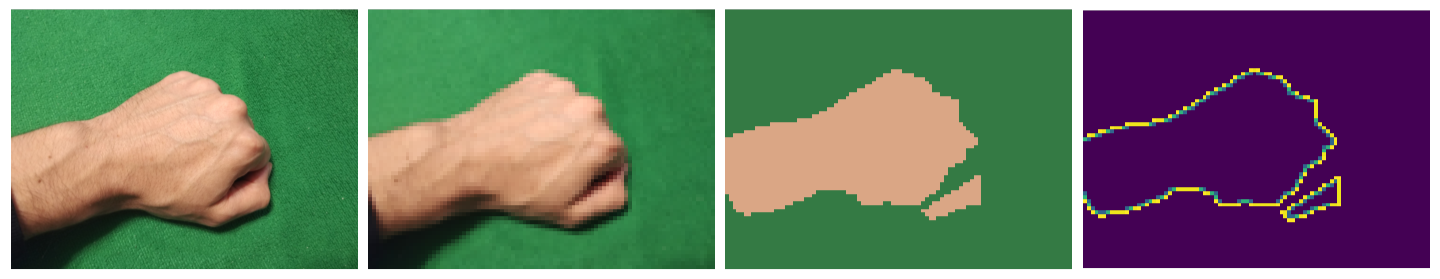

To improve the network's performance, the training and test images were preprocessed to keep only the information relevant to classification. Preprocessing consisted of three steps:

- resizing to 90x60 pixels - this reduces training time, which grows roughly in proportion to the number of pixels in each image

- clustering - each pixel was assigned to one of two clusters based on its position in RGB space, and its color was replaced by the color of its cluster's centroid

- edge detection - an edge detection filter was applied to the clustered images

The resulting images show only the outline of the gesture, which is all the information needed to classify it.
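The three preprocessing steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the code used to build the model: images are assumed to be lists of rows of `(r, g, b)` tuples, "90x60" is read as 90 pixels wide by 60 high, resizing uses nearest-neighbour sampling, the two clusters are seeded with the darkest and brightest pixels, and a Sobel filter on grey levels stands in for the edge filter, which is not named here. All function names are hypothetical.

```python
import math


def resize_nearest(image, new_w, new_h):
    """Nearest-neighbour resize; image is a list of rows of (r, g, b) tuples."""
    h, w = len(image), len(image[0])
    return [[image[y * h // new_h][x * w // new_w] for x in range(new_w)]
            for y in range(new_h)]


def sq_dist(a, b):
    """Squared Euclidean distance between two RGB colours."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def two_means(pixels, iterations=10):
    """k-means with k=2 in RGB space; returns (centroids, labels)."""
    # Deterministic seeds (an assumption): the darkest and the brightest pixel.
    centroids = [min(pixels, key=sum), max(pixels, key=sum)]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest centroid.
        labels = [0 if sq_dist(p, centroids[0]) <= sq_dist(p, centroids[1]) else 1
                  for p in pixels]
        # Update step: each centroid moves to the mean colour of its members.
        for k in (0, 1):
            members = [p for p, label in zip(pixels, labels) if label == k]
            if members:  # keep the previous centroid if a cluster empties
                centroids[k] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centroids, labels


def sobel_edges(grey, threshold):
    """Binary edge map: 1 where the Sobel gradient magnitude exceeds threshold."""
    h, w = len(grey), len(grey[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):  # border pixels are left as non-edges
        for x in range(1, w - 1):
            gx = (grey[y - 1][x + 1] + 2 * grey[y][x + 1] + grey[y + 1][x + 1]
                  - grey[y - 1][x - 1] - 2 * grey[y][x - 1] - grey[y + 1][x - 1])
            gy = (grey[y + 1][x - 1] + 2 * grey[y + 1][x] + grey[y + 1][x + 1]
                  - grey[y - 1][x - 1] - 2 * grey[y - 1][x] - grey[y - 1][x + 1])
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 1
    return edges


def preprocess(image, width=90, height=60, threshold=1.0):
    """Resize, reduce to two colours, then keep only the edges."""
    small = resize_nearest(image, width, height)
    pixels = [p for row in small for p in row]
    centroids, labels = two_means(pixels)
    # Replace each pixel by its cluster centroid, expressed as a grey level.
    grey = [sum(centroids[label]) / 3 for label in labels]
    rows = [grey[y * width:(y + 1) * width] for y in range(height)]
    return sobel_edges(rows, threshold)
```

Because the clustered image contains only two colours, the gradient is exactly zero inside each region, so any small positive threshold marks just the boundary between hand and background.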

Convolutional neural network classifier

The model is composed of the following layers:

- 1st convolution
  - 128 filters with size 3x3, ReLU activation
- 1st pooling
  - size 2x2
- 2nd convolution
  - 64 filters with size 3x3, ReLU activation
- 2nd pooling
  - size 2x2
- 3rd convolution
  - 64 filters with size 3x3, ReLU activation
- 3rd pooling
  - size 2x2
- 4th convolution
  - 32 filters with size 3x3, ReLU activation
- 4th pooling
  - size 2x2
- Flatten
- Dense layer
  - 128 units, ReLU activation
- Dropout
  - 50%
- Output layer
  - 3 units, softmax activation
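To sanity-check the layer list above, the following sketch traces the output shape and parameter count through each layer. It assumes Keras-style defaults that the list does not state: stride-1 convolutions with "valid" (no) padding, 2x2 pooling with stride 2, and an input 60 pixels high by 90 wide. The number of input channels after preprocessing is not stated, so it is a parameter. `LAYERS` and `summarize` are hypothetical names.

```python
import math

# The layer list above as (kind, number of filters or units).
LAYERS = [
    ("conv", 128), ("pool", None),
    ("conv", 64), ("pool", None),
    ("conv", 64), ("pool", None),
    ("conv", 32), ("pool", None),
    ("flatten", None),
    ("dense", 128), ("dropout", None),
    ("dense", 3),  # softmax output, one unit per class
]


def summarize(height=60, width=90, channels=1, kernel=3, pool=2):
    """Return (rows, total_params); each row is (kind, output_shape, params)."""
    shape = (height, width, channels)
    rows, total = [], 0
    for kind, units in LAYERS:
        params = 0
        if kind == "conv":
            h, w, c = shape
            params = kernel * kernel * c * units + units  # weights + one bias per filter
            shape = (h - kernel + 1, w - kernel + 1, units)  # 'valid' padding, stride 1
        elif kind == "pool":
            h, w, c = shape
            shape = (h // pool, w // pool, c)  # non-overlapping windows, remainder dropped
        elif kind == "flatten":
            shape = (math.prod(shape),)
        elif kind == "dense":
            params = shape[0] * units + units  # weights + one bias per unit
            shape = (units,)
        # "dropout" changes neither the shape nor the parameter count.
        rows.append((kind, shape, params))
        total += params
    return rows, total


if __name__ == "__main__":
    for row in summarize()[0]:
        print(row)
```

Under these assumptions the four pooling stages shrink a 60x90 input to 1x3x32, so the Flatten layer passes only 96 values to the dense layer. The 2nd convolution holds the largest share of the parameters (73,792), and the whole model has 143,267 parameters for a single-channel input or 145,571 for an RGB input.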

To reduce overfitting, an image generator was used to apply small random transformations to the training images: rotation, shift, shear and zoom. With early stopping, training stopped after 15 epochs.
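The kind of random transformation described above can be illustrated with a small pure-Python sketch that composes zoom, shear, rotation and shift into one affine matrix and resamples the image with nearest-neighbour lookup about its centre. This is not the generator used to train the model: images are assumed to be 2D lists of grey values, and the ranges in `random_augment` are hypothetical placeholders, since the actual ranges are not stated.

```python
import math
import random


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def affine_matrix(rotation_deg, shift_x, shift_y, shear_deg, zoom_x, zoom_y):
    """Compose zoom, shear, rotation and shift (applied in that order)."""
    r, s = math.radians(rotation_deg), math.radians(shear_deg)
    rotation = [[math.cos(r), -math.sin(r), 0], [math.sin(r), math.cos(r), 0], [0, 0, 1]]
    shear = [[1, -math.sin(s), 0], [0, math.cos(s), 0], [0, 0, 1]]
    zoom = [[zoom_x, 0, 0], [0, zoom_y, 0], [0, 0, 1]]
    shift = [[1, 0, shift_x], [0, 1, shift_y], [0, 0, 1]]
    return matmul(shift, matmul(rotation, matmul(shear, zoom)))


def transform_image(image, matrix, fill=0):
    """Each output pixel is looked up at matrix @ (x, y) in the source image."""
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2  # transform about the image centre
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            sx = matrix[0][0] * dx + matrix[0][1] * dy + matrix[0][2] + cx
            sy = matrix[1][0] * dx + matrix[1][1] * dy + matrix[1][2] + cy
            ix, iy = round(sx), round(sy)  # nearest-neighbour lookup
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
            # pixels that map outside the source keep the fill value
    return out


def random_augment(image, rng, rotation_range=10.0, shift_range=0.1,
                   shear_range=10.0, zoom_range=0.1):
    """Apply one random rotation/shift/shear/zoom; the ranges are hypothetical."""
    h, w = len(image), len(image[0])
    matrix = affine_matrix(
        rotation_deg=rng.uniform(-rotation_range, rotation_range),
        shift_x=rng.uniform(-shift_range, shift_range) * w,
        shift_y=rng.uniform(-shift_range, shift_range) * h,
        shear_deg=rng.uniform(-shear_range, shear_range),
        zoom_x=rng.uniform(1 - zoom_range, 1 + zoom_range),
        zoom_y=rng.uniform(1 - zoom_range, 1 + zoom_range),
    )
    return transform_image(image, matrix)
```

Drawing a fresh matrix for every image in every epoch means the network rarely sees exactly the same outline twice, which is what makes this kind of augmentation useful against overfitting on a small data set.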

Model evaluation

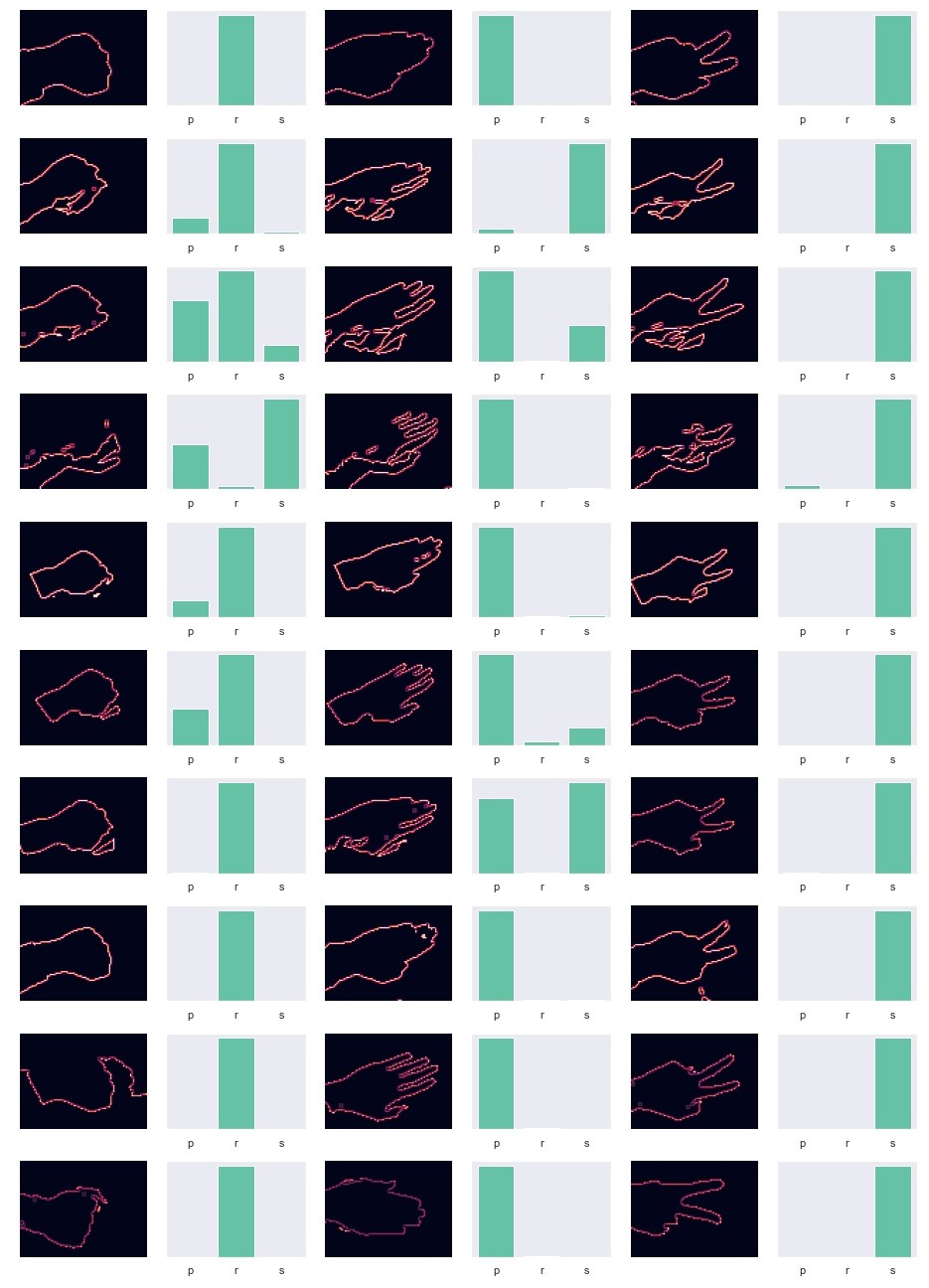

Here are the classification results for images from outside the training and test sets:

In some cases, the model fails to classify the gesture correctly. These errors are caused by the image preprocessing: when the input picture has a non-uniform background, or a background with the same color tone as the hand, the two color clusters do not separate the hand from the background, so the edges of the gesture are not detected correctly.

How to improve the user experience of the app

To get better classification results, follow this advice:

- use your right hand to take a landscape picture of your left hand; this ensures correct hand orientation

- take the picture on a uniform, contrasting background (preferably black or white)

- try to avoid capturing shadows

Data set for training

The CNN model was trained on a data set available on Kaggle under the CC BY-SA 4.0 license.